What if I told you that you don’t need to be a coder to build something as advanced as a self-driving AI? Sounds crazy, right? Well, I recently built a 2D self-driving car from scratch using Neural Networks and Reinforcement Learning—all with zero prior coding experience.

What started as an experiment in curiosity quickly became a 16-hour training marathon, filled with trial and error, AI crashes, and unexpected breakthroughs.

And the result? A fully autonomous AI driver, trained entirely through trial and reward, evolving from a reckless, off-road disaster to a smooth, efficient racer.

This newsletter is a detailed breakdown of that journey—the problems I faced, the solutions I found, and how AI learned to drive just like a human would.

🎯 The Goal: Teaching AI to Drive on Its Own

The objective was simple:

✅ Train an AI model that could learn to drive a 2D car on a virtual racetrack.

✅ The AI should stay on track, avoid going off-road, and maximize performance over time.

✅ No hardcoded rules—the AI should figure everything out itself.

But to do that, I first had to define what my AI needed.

🛠 Defining the AI’s Needs

1️⃣ A Virtual Environment Where the AI Could Learn

The AI needed a realistic racetrack where it could drive, make mistakes, and improve over time.

2️⃣ An AI Algorithm That Could Learn From Its Mistakes

Instead of hardcoding logic for every possible scenario, I wanted the AI to learn by experience—just like a human driver does.

3️⃣ A Way to See What the AI Was Doing in Real-Time

I needed a way to track the AI’s decisions, rewards, and mistakes visually.

4️⃣ A Performance Tracker to Measure Progress

To understand how well the AI was improving, I needed a system that tracked training data, rewards, and driving efficiency over time.

With these four requirements, I now needed the right tools to bring them to life.

📦 The Tech Stack: Dependencies That Made It Possible

Instead of writing everything from scratch, I used pre-built libraries (called dependencies) that helped me speed up development.

Here’s what I used:

🛠 Gymnasium (Box2D) → Created the virtual racetrack for the AI to train in.

🛠 Stable-Baselines3 → Provided the Reinforcement Learning (RL) framework to train the AI.

🛠 OpenCV → Allowed me to track the AI’s visual input and overlay performance stats.

🛠 Matplotlib → Helped me visualize training progress and AI improvements.
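
A quick check along these lines can confirm that all four tools are importable before any training starts. This is only a sketch: the helper name `missing_packages` is made up for illustration, and the import names listed (`Box2D` for the physics engine, `cv2` for OpenCV) are the usual ones but are assumptions about your install.

```python
from importlib.util import find_spec

# Import names for the stack above (assumed; pip names differ, e.g. opencv-python -> cv2).
REQUIRED = ["gymnasium", "Box2D", "stable_baselines3", "cv2", "matplotlib"]


def missing_packages(names):
    """Return the top-level module names that Python cannot locate."""
    return [name for name in names if find_spec(name) is None]


missing = missing_packages(REQUIRED)
print("All set!" if not missing else f"Still missing: {missing}")
```

If something is reported missing, the typical fix is `pip install "gymnasium[box2d]" stable-baselines3 opencv-python matplotlib`.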

Once everything was installed, it was time to code.

💻 The Coding Phase: The First Big Challenge

I started by asking ChatGPT to generate the training script for me.

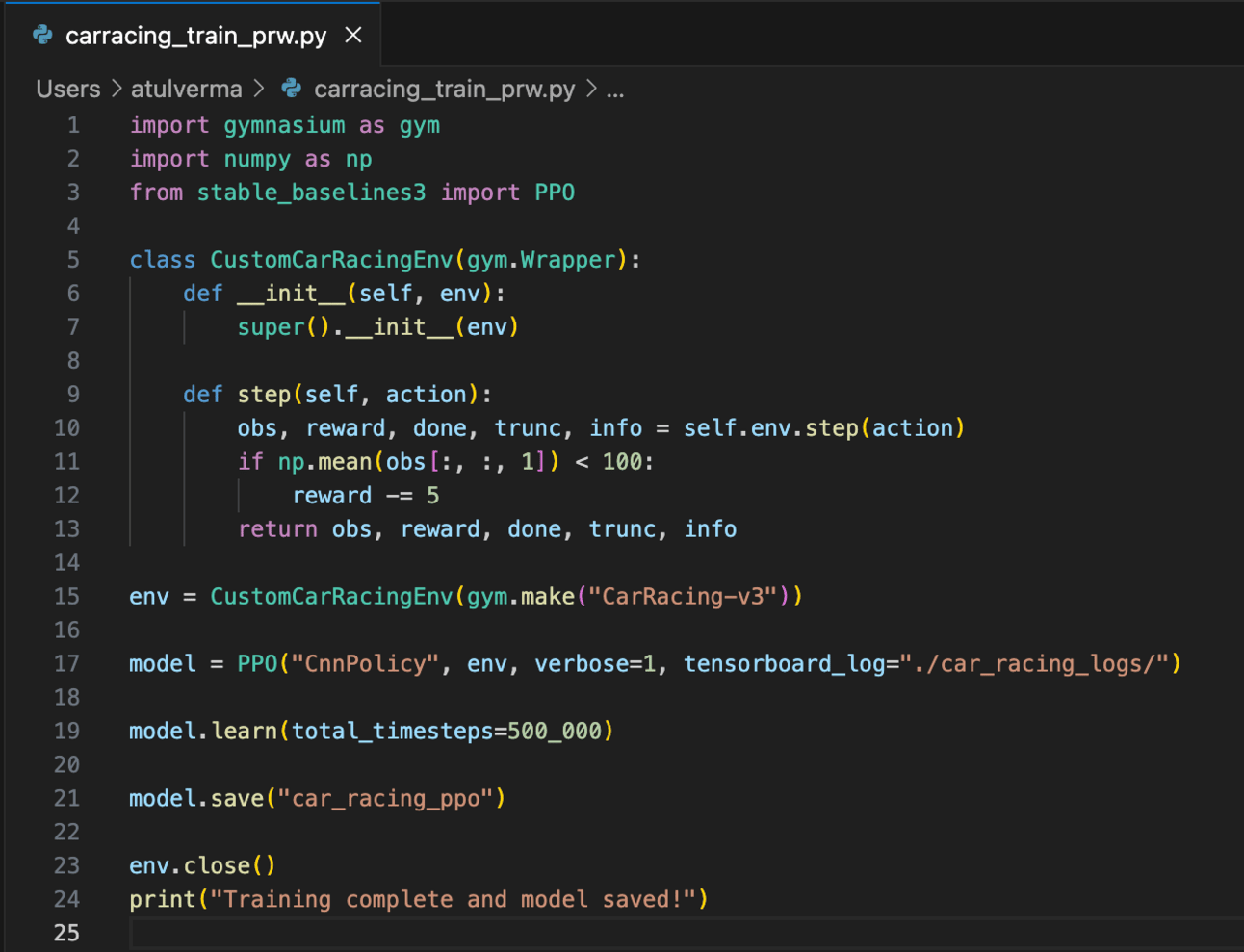

24 lines of code for my training module, and I had no idea what any of them did

I went through each line, and with the help of ChatGPT, broke it down step by step.
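
The script itself only appears in the screenshot, so here is a separate, minimal sketch of what a PPO training script for this stack can look like. It is not the 24 lines from the image. The environment ID `CarRacing-v3` (older Gymnasium releases use `CarRacing-v2`), the `CnnPolicy` choice, the default timestep count, and the save path are all assumptions.

```python
def train(env_id="CarRacing-v3", total_timesteps=100_000, save_path="ppo_car_racing"):
    """Train a PPO agent on a Box2D racing environment and save it.

    Imports happen inside the function so this file can be loaded
    even before the heavy dependencies are installed.
    """
    import gymnasium as gym
    from stable_baselines3 import PPO

    env = gym.make(env_id)                    # assumed env: pixel observations
    model = PPO("CnnPolicy", env, verbose=1)  # CNN policy because the input is an image
    model.learn(total_timesteps=total_timesteps)
    model.save(save_path)                     # Stable-Baselines3 writes a .zip archive
    env.close()
    return model
```

Calling `train()` starts a run with the defaults; `train(total_timesteps=500_000)` would be a longer one.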

🚧 Challenges I Faced (And How I Solved Them)

🛑 Problem 1: The AI Kept Driving Off-Road

At first, the AI had no idea what it was doing. It randomly drove off the track, crashing into everything.

💡 Solution: I modified the training environment to penalize the AI whenever it touched the grass. This forced it to stay on the road.

    if np.mean(obs[:, :, 1]) < 100:  # If too much green is detected
        reward -= 5  # Apply penalty

This simple change immediately made a difference!
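
The check above is a single line inside the environment code. As a rough sketch of how a penalty like this could be packaged, here is a Gymnasium wrapper that adjusts the reward on every step. It swaps the mean-of-green test for an explicit "green clearly dominates red and blue" test, and the color margin, the 90% threshold, and all helper names are assumptions that would need tuning against real frames.

```python
import numpy as np


def green_fraction(frame, margin=40):
    """Fraction of pixels whose green channel clearly dominates red and blue."""
    rgb = frame.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    grass = (g - r > margin) & (g - b > margin)
    return float(grass.mean())


def shaped_reward(reward, frame, max_green=0.9, penalty=5.0):
    """Subtract a fixed penalty when the frame is mostly grass-colored."""
    if green_fraction(frame) > max_green:
        return reward - penalty
    return reward


def with_grass_penalty(env, **kwargs):
    """Wrap a Gymnasium env so each step's reward passes through shaped_reward."""
    import gymnasium as gym  # imported lazily; only needed when wrapping a real env

    class GrassPenalty(gym.Wrapper):
        def step(self, action):
            obs, reward, terminated, truncated, info = self.env.step(action)
            return obs, shaped_reward(reward, obs, **kwargs), terminated, truncated, info

    return GrassPenalty(env)
```

Looking at the whole frame is the simplest option. Cropping to the area around the car before measuring green would likely be a sharper signal.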

🛑 Problem 2: The AI Wasn’t Learning Fast Enough

Even after training for 100,000+ timesteps, the AI wasn’t improving much.

💡 Solution: Increase training time.

I extended training to 500,000 timesteps, which allowed the AI to experiment more and refine its decisions.
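
One way to tell whether extra timesteps are paying off is to smooth the per-episode rewards and watch the trend. Below is a minimal sketch; the helper name is hypothetical and the reward numbers are invented purely to show the smoothing, not taken from this project.

```python
def moving_average(values, window=3):
    """Mean of each run of `window` consecutive values (output is shorter than input)."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


# Illustrative numbers only, not real training results.
episode_rewards = [-50, -30, -40, 10, 60, 110]
print(moving_average(episode_rewards))  # [-40.0, -20.0, 10.0, 60.0]
```

Passing the smoothed list to Matplotlib's `plt.plot` turns it into a learning curve.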

🛑 Problem 3: Tracking AI’s Learning Progress Was Difficult

There were no real-time stats to show how well the AI was driving.

💡 Solution: I used OpenCV to overlay real-time performance stats on the screen.

Now, I could see the AI’s score, speed, and whether it was staying on track while it was driving.
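
An overlay like this can be sketched in a few lines with OpenCV's `cv2.putText`. The function names, text layout, and colors below are assumptions, and how the score, speed, and on-track flag are obtained is left to the caller.

```python
def format_stats(score, speed, on_track):
    """Build the text lines for the overlay."""
    return [
        f"Score: {score:.1f}",
        f"Speed: {speed:.1f}",
        f"On track: {'yes' if on_track else 'no'}",
    ]


def draw_overlay(frame, lines, origin=(10, 20), line_height=20):
    """Draw each line of text onto a copy of the frame and return the copy."""
    import cv2  # imported lazily; only needed when actually drawing

    out = frame.copy()
    x, y = origin
    for i, text in enumerate(lines):
        cv2.putText(out, text, (x, y + i * line_height),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1, cv2.LINE_AA)
    return out
```

Gymnasium renders frames in RGB while OpenCV windows expect BGR, so converting with `cv2.cvtColor(frame, cv2.COLOR_RGB2BGR)` before `cv2.imshow` keeps the colors right.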

🧠 How the AI Actually Learned to Drive (In Simple Terms)

The AI used a neural network, a model loosely inspired by how neurons in the brain connect and strengthen with practice.

At first, it made random moves with no understanding of what worked.

Every time it stayed on the track, it received a reward.

Every time it went off-road, it got a penalty.

Over hundreds of thousands of timesteps, the AI picked up on patterns and figured out the best way to steer, accelerate, and navigate corners.

This was all possible thanks to Proximal Policy Optimization (PPO)—a reinforcement learning algorithm that:

✔ Balances exploration vs. exploitation so the AI doesn’t just repeat the same moves.

✔ Limits how much its behavior can change in a single update, so one bad batch of experience doesn’t undo what it has learned.

✔ Refines its driving strategy over time.
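
The "learns gradually" idea comes from PPO's clipped objective. Here is a small sketch of that formula for a single action; the function name is made up, and the 0.2 clip range is a common default rather than a value confirmed for this project.

```python
def clipped_surrogate(ratio, advantage, clip_range=0.2):
    """PPO's clipped objective for one action.

    ratio:     new policy probability / old policy probability for the action taken
    advantage: how much better (positive) or worse (negative) the action was than expected
    """
    clipped_ratio = max(1.0 - clip_range, min(ratio, 1.0 + clip_range))
    # Taking the minimum removes any incentive to push the ratio far from 1.
    return min(ratio * advantage, clipped_ratio * advantage)


# A good action whose probability already rose 50%: credit is capped at a ratio of 1.2.
print(clipped_surrogate(1.5, 1.0))
# A bad action whose probability rose 50%: the full penalty still applies.
print(clipped_surrogate(1.5, -1.0))
```

In practice Stable-Baselines3 computes this over whole batches of experience; the single-number version just makes the capping visible.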

🎬 The Final Results: Before vs. After

Training took 16 hours in total, but by the end the AI had mastered driving.

🏆 Final Thoughts: What I Learned From This Project

AI is incredibly powerful. Watching a machine learn by trial and error was mind-blowing.

Reinforcement learning works like human learning. The AI trained just like a person learns to ride a bike—through practice and feedback.

Even without coding experience, you can build complex AI systems. With tools like ChatGPT, Gymnasium, and Stable-Baselines3, anyone can get started.

If you found this journey interesting, let’s connect!

Want to follow my projects? Subscribe, follow, or reach out. 🚀

Until next time,

Atul Verma 🚗💡